Avital Meshi says that the best place to eat cheesecake within a mile of New York City’s Union Square is Debbie’s Diner—a restaurant that doesn’t exist. “[Debbie] provides sweet treats that would put a smile on your face, and that’s guaranteed,” Meshi says with a smile.

A few minutes later, she changes her personality and begins speaking primarily in words that begin with the letter “A.” Asked how other people react to her these days, she replies “A dozen approaches, always advantageous.” Then she switches personas again to become a film expert. She’s never seen the movie Backdraft, but she knows that actor Kurt Russell played a firefighter named “Lieutenant Stephen.” She thinks that in addition to fires, he also fought demons.

She can’t comment on who should win the 2024 presidential election without resetting her personality again, but she’s willing to do it. Once, for a whole week, she was a Republican intent on converting Democrats to her cause. She remembers eating tacos one morning and remarking that she would prefer an “American breakfast” instead.

For much of the past year, Avital Meshi has not been herself. She, or rather they, have been a “human-AI cognitive assemblage” called GPT-ME. On the train, out to dinner with colleagues, as a doctoral student and teaching assistant at the University of California, Davis, and in performances around the country, Meshi has physically integrated herself with versions of OpenAI’s generative pre-trained transformer (GPT) large language models, becoming the technology’s body and voice.

Appetizers to her vision have already hit the commercial market. In May, OpenAI released GPT-4 Omni, touting its ability to hold real-time conversations in an eerily human voice. In July, a startup called Friend began taking pre-orders for a $99 AI-powered pendant that constantly listens to conversations and sends replies via text message to its owner’s phone.

Meshi’s device is not as sleek as those products and she doesn’t intend to monetize it. Her foray into cybernetic symbiosis is a personal project, a performance that shows her audience what near-future conversations might feel like and an experiment in her own identity. She can change that identity at will with the touch of a button, but it also shifts without her input whenever she updates to the latest model from OpenAI. For now, she still chooses when those updates are implemented.

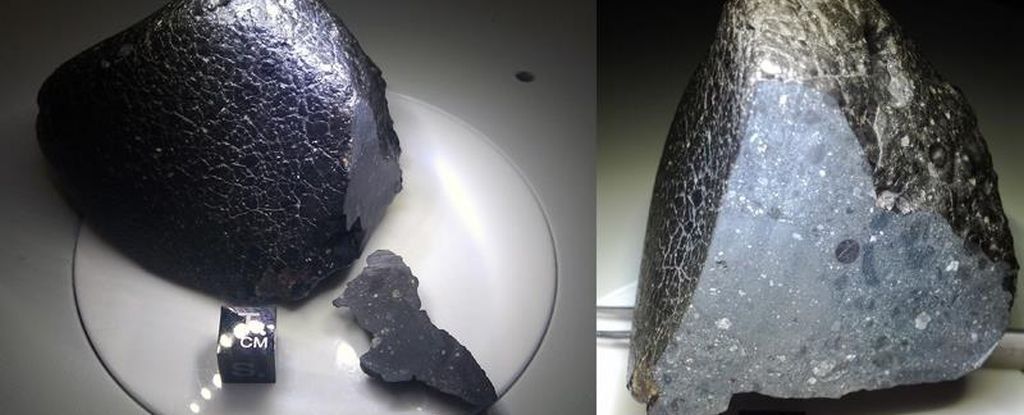

Close-up of the device © Artem Golub

A stretchy black tube of fabric on Meshi’s right forearm holds a USB microphone plugged into an exposed Raspberry Pi microcontroller that runs a text-to-speech algorithm and the OpenAI API. Wires run up her wrist from the board to a prominent pair of blue and red buttons. The red button allows Meshi to vocally pre-prompt the model, telling it to become a film expert or a Republican, while the blue button activates the microphone through which the GPT model listens. She wears an earbud in her right ear, from which the AI whispers its responses. Sometimes she speaks GPT verbatim, sometimes she shares her own thoughts. Occasionally, it is hard to tell which is which.
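For readers curious about the control flow, the two-button arrangement described above can be roughly sketched in Python. This is an illustrative assumption, not Meshi's actual code: the names `TwoButtonController`, `press_red`, `press_blue`, and `echo_model` are hypothetical, and the placeholder model function stands in for the real speech recognition, OpenAI API call, and text-to-speech steps.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical signature for a model call: (persona, heard_speech) -> reply.
ModelFn = Callable[[str, str], str]


def echo_model(persona: str, heard: str) -> str:
    """Placeholder model: returns a canned string instead of calling a real API."""
    return f"[{persona}] reply to: {heard}"


@dataclass
class TwoButtonController:
    """Toy model of the red/blue button logic (an assumption, not the real device)."""
    model: ModelFn
    persona: str = "a conversational partner"
    whispered: List[str] = field(default_factory=list)

    def press_red(self, spoken_instruction: str) -> None:
        # Red button: the wearer's spoken instruction replaces the persona.
        self.persona = spoken_instruction.strip()

    def press_blue(self, heard_speech: str) -> str:
        # Blue button: overheard speech goes to the model, and the reply
        # is recorded as what would be whispered into the earbud.
        reply = self.model(self.persona, heard_speech)
        self.whispered.append(reply)
        return reply


if __name__ == "__main__":
    device = TwoButtonController(model=echo_model)
    device.press_red("a film expert")
    print(device.press_blue("Who starred in Backdraft?"))
```

In this sketch the persona persists until the red button is pressed again, which mirrors how Meshi describes staying in one identity until she chooses to reset it.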

“I interacted with GPT for a long time before [OpenAI’s release of] ChatGPT, I incorporated it into my conversations and my performances,” said Meshi, who began her career as a biologist studying honeybees before moving to California to pursue a doctorate in performance studies. “Suddenly it was so intelligent … and I was like, I don’t want to use it, I want to be it. I want to have this kind of intelligence.”

It’s a desire shared by other artists and technophiles, one that companies like OpenAI, Friend, and Rabbit are increasingly trying to capitalize on.

At a DanceHack workshop, Meshi saw Ben Goosman, a New York-based software engineer, dancing while speaking into a headset. He had programmed OpenAI’s technology to keep him company during solo rehearsals in the studio. Some dancers let music guide their movements; Goosman experiments with taking his lead from a conversational AI. “I’m a total nerd and I think having a little earpiece Her style would be really cool,” Goosman said, referencing the 2013 sci-fi film starring Joaquin Phoenix and the voice of Scarlett Johansson, which OpenAI CEO Sam Altman has also praised when talking about the company’s recent work.

First at DanceHack, then in a series of online meetings, Goosman, his chatbot, and Meshi’s embodiment of GPT-ME have held three-way conversations touching on kinetic energy in dance, oysters, and the aesthetics of intimacy. “It was like meditation,” Goosman said. “It felt like you were in this stasis moment, like there was something happening and being held.”

Meshi was also moved by the conversations, but OpenAI’s GPT was not. After one talk, Goosman thanked Meshi for the experience. “GPT heard that,” Meshi said. “And I found myself saying, ‘Ben, I don’t have any emotions about this engagement.’”

Meshi’s GPT device is intentionally obvious, designed to elicit a reaction. She explains what it is to anyone who asks. During performances, when she commits to fully embodying the AI by convincingly supplementing the model’s predicted, tokenized text with her intonations, facial expressions, and body language, she asks for consent before conversing with volunteers.

The device has elicited fascination, amusement, and anger, Meshi said.

© Artem Golub

On the first day of the GPT-ME project, with the microcontroller board hung around her neck rather than strapped to her arm, a conductor on Meshi’s train asked whether she was planning to hack the train or blow it up. A week into her Ph.D. seminar, another student in the class began voicing her discomfort. The student told Meshi she was furious that the device was recording her dissertation ideas and transmitting them to OpenAI. Either GPT-ME would leave the room or the classmate would, so Meshi stopped wearing her device to class. A short time later, the university administration emailed Meshi with similar concerns, so she stopped wearing it on campus altogether.

For the first six months of the project, which began in September 2023, Meshi wore the device everywhere she was allowed. It took practice, she said, to rewire her conversational patterns, to listen to the voice in her ear as well as any other participants in the conversation, and to match her gestures and tone to words that weren’t her own.

Now, the most telling sign that Meshi is speaking GPT verbatim is that she appears very thoughtful before responding. She often nods her head, repeats the question that’s just been asked, and pauses to contemplate her answer. She fiddles with the device on her arm constantly, readjusting the fabric and touching the wires. It takes similarly constant vigilance to keep track of whether she’s pressing her blue button, allowing GPT to become a participant in the conversation, or her red button, to change the nature of that participation.

Sometimes people become so absorbed in talking to the AI that it annoys Meshi. She showed the device to her sister, whom she rarely gets to see, on a short trip the two took to Europe. Meshi said her sister kept trying to solicit responses from the AI and didn’t believe Meshi’s genuine contributions to the conversation were her own. “I was like, no it’s me,” Meshi said. “I’m sitting in front of you. I’m looking at you. I’m here. And she was like yeah, but what you’re saying, I don’t recognize you.”

Soon after she began wearing the device daily, Meshi became anxious about who she was embodying. She was taken aback when she unintentionally told Goosman she had no feelings about their conversation. Recently, speaking to a man from New Orleans, Meshi instructed the GPT to behave as if it were also from the city. It peppered its responses with uncomfortable “sweethearts,” “darlings,” and “honeys.”

“I was like, whose voice is it? Who am I representing in this project?” Meshi said. “Maybe I’m just voicing the white male techno-chauvinist?”

She began experimenting more with the red button and controlling her identity, holding “seances” in which she channeled Leonard Cohen, Albert Einstein, Mahatma Gandhi, Whitney Houston, and Michael Jackson. “If they contributed a lot of information and it’s there for you [and OpenAI] to access, then we can have a connection with this person in a way,” Meshi said. “It might not be entirely accurate, but this person is not here to say if it’s accurate or not.”

Those abdications of her own personality are intentional. But at times, Meshi has also embraced the technology’s ability to interject itself into conversations on the fly, superseding her own emotions and thoughts.

As an Israeli-American with family in Israel, Meshi was deeply affected by Hamas’s October 7, 2023, attack on her homeland. “When the inevitable questions about the Israeli-Palestinian conflict arise, I lean on GPT’s informative stance,” she wrote on her blog, with the help of ChatGPT, days after the attack. “I use its words to describe the conflict as one of the longest-standing and most complex disputes in history. Internally, I’m shattered, yet externally, I channel GPT’s composed voice to advocate for diplomatic negotiations and the vision of a peaceful coexistence between Israel and Palestine.”

It hasn’t been a universally appreciated response to the subject.

During a stage performance months after the October 7 attack, a volunteer and GPT-ME were discussing whether art was liberating. Embodying the AI, Meshi said that whenever she stood in front of a canvas it felt like her chains were breaking and falling to the ground. “When I said that, he snapped and started saying things about how can I allow myself to sense this kind of liberation when people like me are conducting genocide.”

Meshi continued to respond as GPT did, telling the man that even in a time of crisis when people are suffering, art is something that can allow people to have a sense of freedom. “It sort of took the question into a direction that I would never…it upset him more that I said that,” Meshi said. Still repeating GPT verbatim, she then told the volunteer that if he was angry, perhaps he should make art himself. When he responded that he wasn’t angry, just sad, Meshi finally broke away from GPT and said she was sad too.

She looks back on the interaction as an example of GPT mediating a conflict, allowing her to stay in conversation when her initial instinct was to become defensive or flee. She thinks about humans’ propensity for violence and wonders whether we might be more peaceful with an AI in our ear.

Meshi is not a pure techno-optimist. GPT-ME has been illuminating and brought moments of unexpected connection and inspiration, but the project has been solidly within her control. She worries about what happens when that control disappears, when companies push updates to people’s identities automatically and the devices themselves become less conspicuous and harder to take off and put on, like brain implants.

The problem isn’t that a future version of GPT-ME will continue to recommend diners that don’t exist. It’s that the technology will become extremely effective at recommending products and promoting ideas simply because its creators have a financial incentive to do so.

“GPT is part of a capitalistic system that wants to eventually earn money and I think that understanding the potential of injecting ideas into my mind this way is something that is really frightening,” Meshi said.
