The RTX 50-series GPUs may have been the stars of the show this CES, but AI is Nvidia’s real bread and butter. In Nvidia’s perfect world, PCs and games would all be subsumed by AI that runs directly on your PC without cloud processing. If there is one thing I wish AI could do, it’s stop talking so much.

Instead, what I saw walking through a closed suite of Nvidia demos was a rough first draft of AI concepts. Some of its planned models could make sense with more fine-tuning. The company is working on a text-to-body motion framework for developers, though we’ll have to see how professional animators react to the tool. Additional AI lip-syncing and “autonomous enemies” are supposed to transform the typical boss fight, where you learn and then exploit a predictable pattern, into something more spontaneous for both enemies and players.

The generative AI made to renovate your classic NPC or enemy AI in games doesn’t quite stick the landing. Take, for instance, the AI “Ally” Nvidia helped create for the battle royale game PUBG: Battlegrounds. It was a silly trailer for what was essentially your usual co-op AI, but one you can issue orders to with your voice.

I asked it to talk like a pirate. The AI responded, “Ah, the pirate life. Let’s hope we don’t end up walking the plank. Stick with me, and we’ll find a buggy soon.” Somehow, an AI teammate with a tacked-on personality that talks back to you is worse than the unblinking NPC buddy you can yell at for getting in your way every few seconds. I found the AI was pretty slow at helping me acquire a weapon. When I inevitably got shot and crawled toward him, pleading with him to pick me up, the bot shot absently at the enemy, then ran past me into a neighboring house. I only survived because the developers granted invincibility if you got shot during the demo.

The PUBG Ally is the same asinine experience as playing co-op with a bot, except this one always talks back to you in complete sentences. PUBG publisher Krafton also uses Nvidia’s tech to craft inZOI, a Sims-type life simulator that puts chatbots in characters’ heads. Despite the AI supposedly planning and making life choices for the in-game characters, it still looked like a dull version of The Sims, lacking most of the charm of those games. I see the vision; it’s just not there yet.

We’ve previously tried Nvidia’s ACE, its tech for autonomous game NPCs, at the last CES and later in 2024. Based on those demos, the generated voice lines are incredibly stiff and sound like they’re being read from a parody book of cyberpunk and detective genre clichés. Nvidia didn’t have an update for its AI NPCs this time; instead, the company showed off the tech in a demo of an upcoming game called ZooPunk. It’s supposedly about a rebel bunny with two laser swords on his back. How can anybody make that uncool? By adding scratchy AI voice lines to its characters.

In our demo, Nvidia asked an in-game character to change the color of his spaceship from beige to purple. “No problem. Let’s get started,” the AI replied. Instead of purple, it made the ship orange. On the second try, the AI managed to find the right end of the color spectrum. Then, Nvidia asked it to change the decal on the ship to a narwhal fighting a unicorn. Instead, the AI generated an image that seemed to show a narwhal doing a Lady and the Tramp spaghetti scene with a ball-shaped horned gelding.

The real showcase of Nvidia’s AI was in its desktop companion apps. First was G-Assist, a chatbot you can use to manipulate settings in Nvidia’s apps and calculate the best in-game settings for your PC’s specs. The chatbot can even give you a graph of your GPU and CPU performance over time, and it should run directly on your PC rather than in the cloud. I would prefer it if it could adjust your in-game settings for you rather than leaving you to turn the knobs yourself. Still, at the very least, it’s an intriguing use for AI.

But AI can’t just be intriguing. It needs to be wild. Otherwise, who cares? There was a demo of an AI head made for streamers that was surprisingly rude to users. However, the real star was a talking mannequin head sitting like a gargoyle on your desktop screen. It uses the company’s neural faces, lip-syncing, text-to-voice, and skin models to try to make something “realistic.” In my estimation, it still lands on the wrong side of the uncanny valley.

The idea is you could talk to it like you would Copilot on Windows, but you can also drop files onto its forehead for the AI to read and regurgitate information from. Nvidia demoed this with an old PDF of the booklet that came with Doom 3 (as if to remind us when playing games on PC was as simple as buying and installing a disc). Based on the booklet, it could offer a rundown of the game’s story.

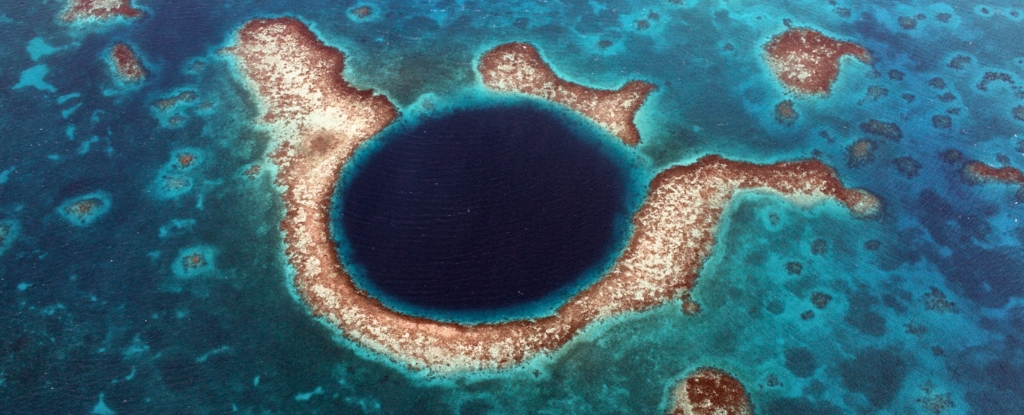

You can add a face to AI, but it will still just be a text-generation machine that doesn’t comprehend what it says. Nvidia mentioned it could add G-Assist to its animated assistant, but that wouldn’t make it any faster or more reliable. It would still be a big black hole of AI taking up the bottom of your desktop, staring at you with lifeless eyes and an empty smile.